Show Notes

As engineers, we often try to visualize what our projects will look like when finished. We envision how a roadway or traffic project would fit into the surrounding environment and how cars, pedestrians, and cyclists would interact. We imagine how a new treatment cell would fit within an existing treatment plant. While each of us has a vision for the projects we design, one of the biggest challenges we face is sharing that vision with a client, a regulatory agency, or the public to secure their support and move the project forward. At RK&K, we have long relied on our engineers and graphic designers to develop displays, renderings, and even video simulations to help share our vision with others. More recently, we have implemented another approach that fully immerses the audience, allowing them to truly experience the finished project early in the planning and design stage.

RK&K’s Graphics and Innovative Technologies teams are leading an initiative in the use of Virtual Reality (VR) to support the projects we design and implement. Led by Innovative Technologies Manager Tom Earp, GISP, and Senior 3D Specialist JP Chevalier, RK&K has built the capability to develop fully rendered 3D environments for use in client presentations, stakeholder engagement meetings, and larger public forums. The resulting 360-degree videos can also be made available via the web, through YouTube and on project and client websites. While RK&K currently uses Oculus Quest headsets, the videos are viewable in most virtual reality headsets and also on a desktop, where the viewer can rotate the view a full 360 degrees.

The work still begins with our engineers, designers, and scientists. The realism of the environment is enhanced when our Graphics team imports design elements from CADD into their software tools. JP explains the process: “The proposed VR environment is modeled using the 2D CADD design information as a base to build upon. Roads, curbs, sidewalks, signs, traffic signals, etc. are all modeled in 3D space according to their corresponding 2D design information. The resulting 3D surfaces then have various site-specific materials applied.” The project team can assist JP by having examples of local features (e.g., signal poles, sign structures, light poles) ready to be modeled in the 3D world. Additional realism can be achieved by modeling specific structures like homes, businesses, and places of worship to provide more landmarks for the user.

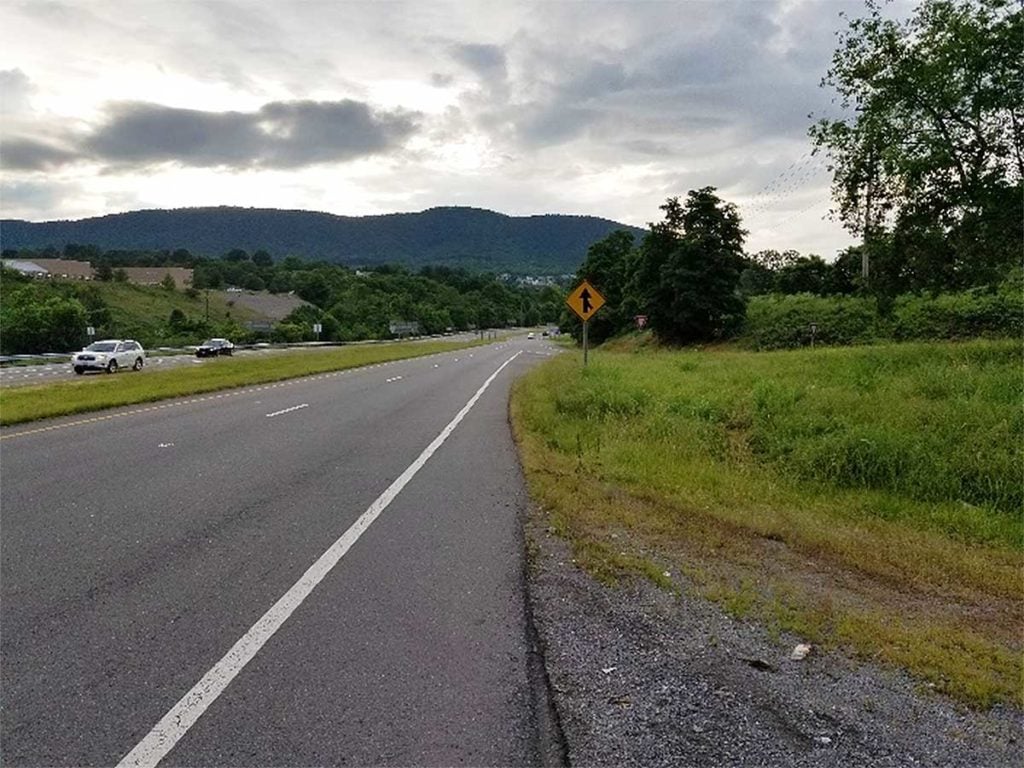

Seemingly small details, such as matching the terrain along the horizon, can add to the realism of the environment for the user. Terrain data can be taken from a detailed survey or from available GIS contour data to create a 3D environment. In the example below, the distinctive outline of Read Mountain is readily apparent in the virtual reality experience.

Snapshot of the mountains in the background for the US 220 project.

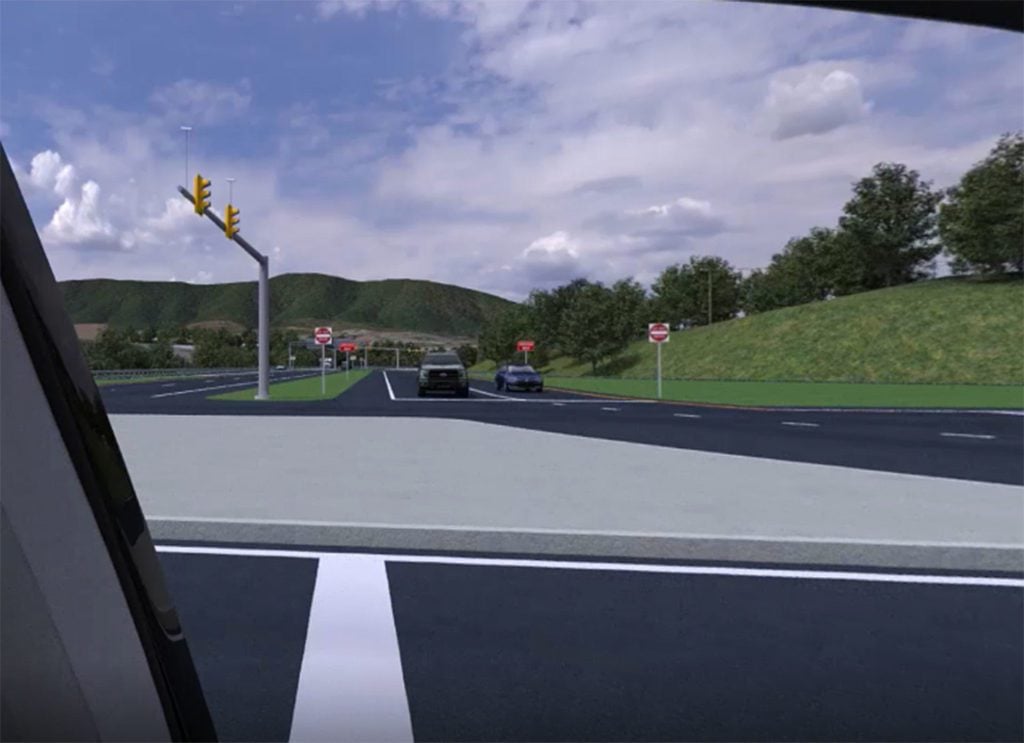

A ground level view in the virtual environment from the same location.

Asked about the most challenging aspect of creating an immersive 360-degree VR experience, JP says the virtual environment “needs to be developed with the realization that the end-user can look in any direction at-will. With a typical fixed-view animation, time can be saved by only modeling what will be viewed along a pre-programmed path. In the virtual reality environment, there are no short cuts. Every site feature must be fully modeled and complete to keep the user immersed in the simulation.” Once the work is done, the waiting begins. Rendering a 3D virtual environment takes four times longer than a corresponding fixed-view style animation, so the schedule must be set early and allow sufficient time for edits and re-rendering the video. For a recent project, nearly 40 hours were required to complete rendering of the video.
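For teams planning around that rendering time, the arithmetic can be sketched in a few lines of Python. This is only a rough planning aid, not a description of RK&K's actual workflow. It reads "four times longer" as a 4x multiplier, and the function name, the 10-hour fixed-view baseline, and the number of re-render passes are all assumptions chosen for illustration.

```python
def estimate_render_hours(fixed_view_hours, vr_multiplier=4.0, rerender_passes=0):
    """Estimate total render hours for a 360-degree VR video.

    fixed_view_hours: hours a comparable fixed-view animation would take (assumed input).
    vr_multiplier:    how much longer the VR render takes (4x, per the figure quoted above).
    rerender_passes:  hypothetical number of full re-renders after edits.
    """
    if fixed_view_hours < 0 or rerender_passes < 0:
        raise ValueError("hours and passes must be non-negative")
    single_pass = fixed_view_hours * vr_multiplier
    # Each round of edits is assumed to require a complete re-render.
    return single_pass * (1 + rerender_passes)


# Assumed baseline: a 10-hour fixed-view render, which a 4x multiplier
# turns into a 40-hour VR render.
first_pass = estimate_render_hours(10)
with_one_revision = estimate_render_hours(10, rerender_passes=1)
print(f"First pass: {first_pass:.0f} h, with one full re-render: {with_one_revision:.0f} h")
```

Even a single round of edits doubles the render time in this simple model, which is why the schedule needs to account for revisions from the start.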

Looking ahead, we anticipate that these technologies will become more common as our clients and the public require a better understanding of the improvements they are being asked to support. On the transportation side, we are seeing increased use of innovative intersections. These configurations are unfamiliar to drivers, and that unfamiliarity often leads to opposition. Being able to place a citizen “inside the car” via a VR experience, and drive them through the intersection to truly understand how it would operate, can be invaluable.

There are also tools available to make these experiences even more interactive. Tom has been working on a prototype driving simulator. He says, “Using Unity [gaming engine] and the Oculus Quest, we have developed a proof-of-concept driving simulator. The user controls the vehicle’s steering, acceleration, and braking with the Oculus Touch controllers and can drive through the realistic 3D environment. Future iterations will include steering wheel and pedal support, as well as additional vehicles on the road controlled through Artificial Intelligence.” While this may seem far-fetched, we are always looking ahead for opportunities to further engage the public and our clients and to help them understand the importance of the projects we are working on.